Introduction¶

This notebook presents Many-to-Many architecture based on Bidirectional LSTM cells. Neural network is used to learn English to French translation task on a small corpus of sequences.

Dataset

- Udacity NLP Nanodegree - I found dataset as part of the course

- Udacity NLP GitHub - dataset link

Resources

- Bidirectional Recurrent Neural Networks (1997) by Mike Schuster and kuldip K. Paliwal

Imports¶

import os

import numpy as np

import matplotlib.pyplot as plt

Limit TensorFlow GPU memory usage

import tensorflow as tf

gpu_options = tf.GPUOptions(allow_growth=True) # init TF ...

config=tf.ConfigProto(gpu_options=gpu_options) # w/o taking ...

with tf.Session(config=config): pass # all GPU memory

English to French Dataset¶

Download dataset from the link in the introduction and point path below to folder with small_vocab_en and small_vocab_fr

dataset_location = '/home/marcin/Dropbox/Courses/Udacity/NLPND/aind2-nlp-capstone/data/'

small_vocab_en contains approx 137860 short sentences in English. small_vocab_fr contains corresponding sentences in french.

with open(os.path.join(dataset_location, 'small_vocab_en')) as f:

# line below: 1) reads lines from file,

# 2) strips /n char and converts to lowercase,

# 3) adds special start/end words

data_en_raw = list(map(lambda x: 'ST '+x.strip().lower()+' EN', f.readlines()))

print('len:', len(data_en_raw))

print('example sentences:')

data_en_raw[4:7]

with open(os.path.join(dataset_location, 'small_vocab_fr')) as f:

# line below: 1) reads lines from file,

# 2) strips /n char and converts to lowercase,

# 3) adds special start/end words

data_fr_raw = list(map(lambda x: 'ST '+x.strip().lower()+' EN', f.readlines()))

print('len:', len(data_fr_raw))

print('example sentences:')

data_fr_raw[4:7]

Use Keras tokenizer to convert text sentences to tokens. Each word gets it's own unique integer token. Special words ST/EN also get their tokens.

tok_en = tf.keras.preprocessing.text.Tokenizer(lower=False)

tok_en.fit_on_texts(data_en_raw)

data_en_tok = tok_en.texts_to_sequences(data_en_raw)

print('example tokens for English:')

print('is:', tok_en.word_index['is'], ' ',

'ST:', tok_en.word_index['ST'], ' ',

'EN:', tok_en.word_index['EN'], ' ',

'in:', tok_en.word_index['in'], ' ',

'it:', tok_en.word_index['it'])

print('example sentences after tokenization:')

data_en_tok[4:7]

tok_fr = tf.keras.preprocessing.text.Tokenizer(lower=False)

tok_fr.fit_on_texts(data_fr_raw)

data_fr_tok = tok_fr.texts_to_sequences(data_fr_raw)

print('example tokens for French:')

print('est:', tok_fr.word_index['est'], ' ',

'ST:', tok_fr.word_index['ST'], ' ',

'EN:', tok_fr.word_index['EN'], ' ',

'en:', tok_fr.word_index['en'], ' ',

'il:', tok_fr.word_index['il'])

print('example sentences after tokenization:')

data_fr_tok[4:7]

Calculate maximum sentence lengths

max_len_en = len(max(data_en_tok, key=len))

max_len_fr = len(max(data_fr_tok, key=len))

max_len_both = max(max_len_en, max_len_fr)

print('Max length English sentence (tokens): ', max_len_en)

print('Max length French sentence (tokens): ', max_len_fr)

print('Max length in either English or French:', max_len_both, 'tokens (including EN/ST)')

Pad both corpuses to longest sentence - input and output lenghts need to match

data_en = tf.keras.preprocessing.sequence.pad_sequences(

data_en_tok, maxlen=max_len_both, padding='post')

data_fr = tf.keras.preprocessing.sequence.pad_sequences(

data_fr_tok, maxlen=max_len_both, padding='post')

Print some statistics

n_en_seq = data_en.shape[1]

n_fr_seq = data_fr.shape[1]

n_en_vocab = len(tok_en.word_index)

n_fr_vocab = len(tok_fr.word_index)

max_seq_len = max(n_en_seq, n_fr_seq)

print('English sequence length (tokens): ', n_en_seq)

print('French sequence length (tokens): ', n_fr_seq)

print('Num tokens in English vocabulary: ', n_en_vocab)

print('Num tokens in English vocabulary: ', n_fr_vocab)

print('English train data')

print('shape:', data_en.shape)

print(data_en[4:7])

print('French train targets data')

print('shape:', data_fr.shape)

print(data_fr[4:7])

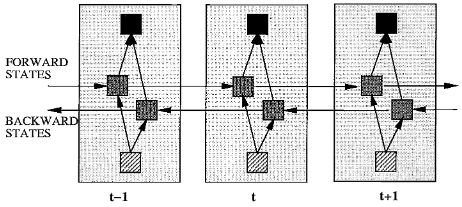

Bidirectional LSTM¶

from tensorflow.keras.layers import Input, Embedding, Bidirectional, GRU

from tensorflow.keras.layers import TimeDistributed, Dense, Activation

Create Keras model

X_input = Input(shape=(n_en_seq,)) # (?, 23)

X_embed = Embedding(input_dim=n_en_vocab, output_dim=50)(X_input) # (?, 23, 50)

X_bidir = Bidirectional( GRU(units=64, return_sequences=True) )(X_embed) # (?, 23, 128)

X_td = TimeDistributed(Dense(units=n_fr_vocab))(X_bidir) # (?, 23, 346)

X_act = Activation('softmax')(X_td) # (?, 23, 346)

model = tf.keras.Model(inputs=X_input, outputs=X_act)

model.compile(loss=tf.keras.losses.sparse_categorical_crossentropy,

optimizer=tf.keras.optimizers.Adam(lr=0.001),

metrics=[tf.keras.metrics.sparse_categorical_accuracy])

model.summary()

Optional: plot nice diagram and save to file. This requires graphviz and pydot to be installed.

# from tensorflow.keras.utils import plot_model

# plot_model(model, to_file='model.png', show_shapes=True, show_layer_names=True)

The result should be as follows:

Train model

hist = model.fit(x=data_en, y=np.expand_dims(data_fr, axis=-1),

batch_size=1024, epochs=10, validation_split=0.2)

Test Model

Helper function to convert tokenized sentence back to words

def sequence_to_french(seq):

words = [tok_fr.index_word[x] for x in seq if x in tok_fr.index_word]

return ' '.join(words)

index = 234

english_sentence = data_en_raw[index]

french_sentence = data_fr_raw[index]

prediction_prob = model.predict(data_en[index:index+1])

prediction_prob = prediction_prob.squeeze()

prediction_tok = prediction_prob.argmax(axis=-1)

predicted_sentence = sequence_to_french(prediction_tok)

print('english: ', english_sentence)

print('french (original): ', french_sentence)

print('french (predicted): ', predicted_sentence)