Software Projects


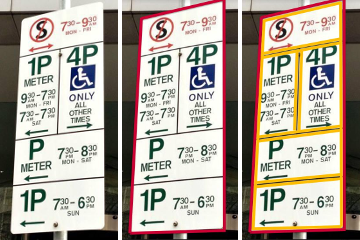

Parking sign detection and recognition

Description: Parking signs in Australia are notoriously complicated. The project was to build a proof-of-concept vision system that detects and recognises parking signs in images taken with a mobile phone and tells the user whether they can park at that location.

Technology: The system uses a customised, state-of-the-art multi-stage neural network for detection. The main challenges were the tiny dataset and the large variety of backgrounds, lighting and weather conditions, and obstructions. The system was deployed to production on Amazon EC2.

This project won first place and a $20,000 prize at Melbourne Knowledge Week. I was fully responsible for the AI technology; the demo was delivered by the customer.
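The source does not say how the final verdict is derived once the signs are recognised. As a hedged illustration only, the sketch below assumes each recognised sign line has already been converted into a hypothetical `Restriction` record (days, time window, time limit) and checks a planned stay against it. Real Australian signs have many more cases (permits, loading zones, windows crossing midnight) that this ignores.

```python
from dataclasses import dataclass
from datetime import datetime, time, timedelta
from typing import FrozenSet, List, Optional


@dataclass(frozen=True)
class Restriction:
    """One recognised sign line, e.g. '2P 8am-6pm Mon-Fri' (hypothetical encoding)."""
    days: FrozenSet[int]        # weekday numbers, Monday=0 ... Sunday=6
    start: time                 # window start (same-day windows only)
    end: time                   # window end
    max_minutes: Optional[int]  # None = no parking allowed during the window


def can_park(restrictions: List[Restriction], arrival: datetime, stay: timedelta) -> bool:
    """Return True if a stay starting at `arrival` breaks none of the restrictions.

    Only windows on the arrival day are checked; stays crossing midnight are not handled.
    """
    departure = arrival + stay
    for r in restrictions:
        if arrival.weekday() not in r.days:
            continue  # restriction does not apply on this day
        win_start = datetime.combine(arrival.date(), r.start)
        win_end = datetime.combine(arrival.date(), r.end)
        if departure <= win_start or arrival >= win_end:
            continue  # the stay does not overlap the restricted window
        if r.max_minutes is None:
            return False  # any overlap with a no-parking window is a violation
        # Only the time spent inside the window counts towards the limit.
        inside = min(departure, win_end) - max(arrival, win_start)
        if inside > timedelta(minutes=r.max_minutes):
            return False
    return True
```

For example, a "2P 8am-6pm Mon-Fri" sign would be `Restriction(frozenset(range(5)), time(8), time(18), 120)`.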


Automatic text summarization of financial news articles

Description:

Stock traders need to process large quantities of complex information in real time to stay competitive. The project was to analyse an input PDF and present the user with a short, 2-3 sentence summary.

Technology:

During the research phase we experimented with extractive and abstractive text summarization, sentiment analysis and translation techniques to obtain all the pieces needed for the final product. Decoding raw PDFs was also a significant challenge.
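To make the extractive approach concrete, here is a minimal sketch of a classic word-frequency baseline: score each sentence by the average corpus frequency of its non-stop-words and keep the top 2-3 sentences in their original order. It is not the method used in the project; the stop-word list and the naive sentence splitter are assumptions.

```python
import re
from collections import Counter

# A small illustrative stop-word list (an assumption; real systems use larger ones).
STOP_WORDS = {"the", "a", "an", "and", "or", "of", "to", "in", "on", "for", "is", "are",
              "was", "were", "it", "its", "that", "this", "by", "with", "as", "at"}


def split_sentences(text: str) -> list:
    # Naive splitter: break after '.', '!' or '?' followed by whitespace.
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s.strip()]


def tokenize(sentence: str) -> list:
    # Lower-case word tokens with stop words removed.
    return [w for w in re.findall(r"[a-z0-9']+", sentence.lower()) if w not in STOP_WORDS]


def summarize(text: str, n_sentences: int = 3) -> str:
    """Frequency-based extractive summary: keep the n highest-scoring sentences in document order."""
    sentences = split_sentences(text)
    freq = Counter(w for s in sentences for w in tokenize(s))

    def score(s: str) -> float:
        words = tokenize(s)
        # Average word frequency, so long sentences are not automatically favoured.
        return sum(freq[w] for w in words) / len(words) if words else 0.0

    ranked = sorted(range(len(sentences)), key=lambda i: score(sentences[i]), reverse=True)
    keep = sorted(ranked[:n_sentences])  # restore original order
    return " ".join(sentences[i] for i in keep)
```

Abstractive summarization, by contrast, generates new sentences (typically with a sequence-to-sequence neural model) rather than selecting existing ones.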

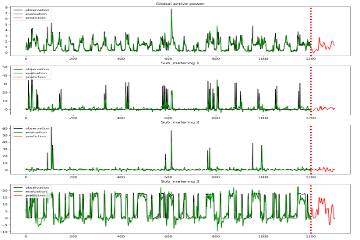

Time series analysis and prediction for housing occupancy

Description:

Smart home and IoT technologies offer huge potential savings in heating, power and air conditioning, as well as in predictive maintenance. By combining sensor data from thousands of properties, we were able to identify actionable insights for occupants and landlords.

Technology:

Houses were equipped with a variety of sensors, such as power, temperature, humidity and CO2. We used a variety of models, such as ARIMA, Gaussian processes and neural networks, to detect latent variables (e.g. occupancy status), predict behavioural patterns (e.g. heating requirements) and estimate the risk of issues (e.g. hidden mould).
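ARIMA models are built on autoregression. As an illustration of that component only, the sketch below fits an AR(p) model by ordinary least squares with NumPy and iterates it forward to forecast. It omits the differencing (I) and moving-average (MA) parts, which a library such as statsmodels (its `ARIMA` class) handles; `fit_ar` and `forecast` are hypothetical helper names.

```python
import numpy as np


def fit_ar(series: np.ndarray, p: int) -> np.ndarray:
    """Least-squares fit of y_t = c + a_1*y_{t-1} + ... + a_p*y_{t-p} + e_t.

    Returns the coefficient vector [c, a_1, ..., a_p].
    """
    y = np.asarray(series, dtype=float)
    n = len(y)
    # Design matrix: a constant column plus one column per lag k (values y_{t-k} for t = p..n-1).
    X = np.column_stack([np.ones(n - p)] + [y[p - k:n - k] for k in range(1, p + 1)])
    coef, *_ = np.linalg.lstsq(X, y[p:], rcond=None)
    return coef


def forecast(series: np.ndarray, coef: np.ndarray, steps: int) -> np.ndarray:
    """Iterate the fitted AR recursion forward, feeding each prediction back in."""
    history = list(np.asarray(series, dtype=float))
    p = len(coef) - 1
    out = []
    for _ in range(steps):
        lags = history[-1:-p - 1:-1]  # y_{t-1}, ..., y_{t-p}, most recent first
        nxt = coef[0] + float(np.dot(coef[1:], lags))
        out.append(nxt)
        history.append(nxt)
    return np.array(out)
```

In a setting like the one described, `series` might be hourly temperature or CO2 readings from one property; the lag order `p` would be chosen by validation.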

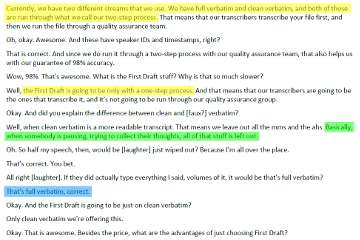

NLP Data Mining and Analysis of Call Center Transcripts

Description:

I needed to analyze a database of call transcripts in a deeper linguistic context and identify advisory skill sets and the sales strategies applied (e.g., up-selling and cross-selling) for training purposes.

Technology:

I identified key phrases using both metadata and a BERT-based deep learning architecture, a popular choice for language modeling and analysis.
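The project itself used metadata and a BERT-based model. Purely to make the task concrete, the sketch below is a much simpler, swapped-in keyword baseline that tags advisor utterances as up-selling or cross-selling. The cue phrases in `STRATEGY_PATTERNS` are invented for illustration; a learned model would replace them.

```python
import re
from collections import Counter

# Hypothetical cue phrases per strategy; a trained classifier would learn these instead.
STRATEGY_PATTERNS = {
    "up-selling": [r"\bupgrade\b", r"\bpremium\b", r"\bhigher tier\b"],
    "cross-selling": [r"\bwould you also\b", r"\badd (?:a|an|our)\b", r"\bbundle\b"],
}


def tag_utterance(utterance: str) -> set:
    """Return the set of strategy labels whose cue phrases appear in the utterance."""
    text = utterance.lower()
    return {label for label, patterns in STRATEGY_PATTERNS.items()
            if any(re.search(p, text) for p in patterns)}


def strategy_counts(transcript: list) -> Counter:
    """Count how many utterances in a transcript show each strategy."""
    counts = Counter()
    for utterance in transcript:
        counts.update(tag_utterance(utterance))
    return counts
```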

Automated wind turbine fault detection

Description:

This project is a proof-of-concept neural architecture for automated fault detection in high-resolution images of wind turbine blades. I provided know-how through ongoing consulting and also developed the actual software for data pre-processing and for model training and testing. The customer provided images and preliminary labels in a raw format. A large part of the project was browsing, cleaning, selecting and labelling the provided data so it could be fed into the machine learning component.

Technology:

Due to the unique technical challenges encountered, two non-standard components were developed:

1) a completely custom pre-processing pipeline to deal with the characteristics of the input data

2) far-reaching optimisation of the neural network subsystem to allow for fast training.
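The source does not describe the custom pre-processing pipeline. One common step when feeding high-resolution images to a neural network is cutting them into fixed-size, overlapping tiles. The sketch below shows that step under this assumption, with `tile_image`, the tile size and the overlap all hypothetical.

```python
import numpy as np


def tile_image(image: np.ndarray, tile: int, overlap: int) -> list:
    """Split an H x W (x C) image into overlapping square tiles.

    Returns a list of (row, col, patch) tuples. Edge tiles are shifted inwards so
    every patch is exactly tile x tile and the whole image is covered.
    """
    h, w = image.shape[:2]
    if tile > h or tile > w:
        raise ValueError("tile larger than image")
    stride = tile - overlap
    if stride <= 0:
        raise ValueError("overlap must be smaller than tile")

    def starts(size: int) -> list:
        s = list(range(0, size - tile + 1, stride))
        if s[-1] != size - tile:
            s.append(size - tile)  # final tile flush with the image border
        return s

    return [(r, c, image[r:r + tile, c:c + tile])
            for r in starts(h) for c in starts(w)]
```

The overlap means any defect no larger than the overlap that straddles a tile border still appears whole in at least one tile, at the cost of some duplicated computation.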

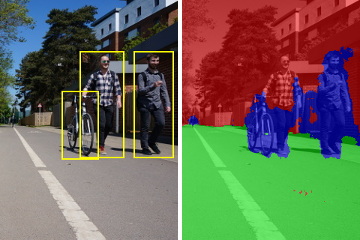

Self-driving software for Semi-Autonomous Unmanned Ground Vehicle

Description: In this project I was the principal software developer, responsible for designing and implementing the self-driving module for an Unmanned Ground Vehicle (UGV). The robot navigated by GPS along predetermined routes, while an obstacle avoidance module was responsible for detecting unexpected obstacles such as pedestrians and parked vehicles (the robot travelled on walking paths).

Technology: The primary sensor was a front-facing monocular RGB camera (a webcam). Images from the camera were processed by two independent neural networks:

1) YOLO for detecting and localising objects such as pedestrians and parked vehicles. The network was trained on a pre-existing dataset mixed with our own training data.

2) SegNet for detecting whether the ground in front of the vehicle is drivable (pavement, tarmac). The network was pre-trained on Cityscapes, with a few added images.
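How the two networks' outputs were combined is not stated in the source. A minimal sketch, assuming a hypothetical `corridor_clear` rule: treat a fixed rectangle at the bottom centre of the image as the vehicle's immediate path, and report it clear only if most of it is segmented as drivable and no detector box overlaps it. All thresholds are placeholders.

```python
import numpy as np


def corridor_clear(drivable: np.ndarray, boxes, corridor_frac: float = 0.3,
                   horizon_frac: float = 0.5, min_drivable: float = 0.9) -> bool:
    """Decide whether the path directly ahead is clear.

    drivable: H x W boolean mask from the segmentation network (True = drivable).
    boxes: iterable of (x1, y1, x2, y2) pixel boxes from the object detector.
    The corridor is the image area below `horizon_frac` of the height and within
    the central `corridor_frac` of the width: a crude stand-in for the vehicle's path.
    """
    h, w = drivable.shape
    top = int(h * horizon_frac)
    left = int(w * (0.5 - corridor_frac / 2))
    right = int(w * (0.5 + corridor_frac / 2))

    # 1) Enough of the corridor must be classified as drivable ground.
    if drivable[top:, left:right].mean() < min_drivable:
        return False
    # 2) No detected object may overlap the corridor rectangle.
    for x1, y1, x2, y2 in boxes:
        if x1 < right and x2 > left and y1 < h and y2 > top:
            return False
    return True
```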


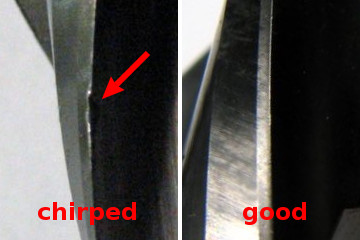

Tool wear assessment for CNC router

Description: A CNC router is a machine that uses a rotating tool bit (drill) to remove material from a solid block to manufacture a target part. Tool bits wear down, and technicians often forget to check and replace them. This system automatically takes a picture of the tool bit before a job starts and feeds it into a convolutional neural network that classifies the bit's current wear.

Technology: Image classification is performed with DenseNet-201, which showed the best performance of the architectures tried. The CNN was pre-trained on ImageNet and not trained any further; the classifier was trained on the target dataset with heavy data augmentation and regularisation. After training, the network was able to detect cracked, chipped or overheated (discoloured) tool bits.
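The source mentions heavy data augmentation without listing the transforms. The sketch below is one plausible, hypothetical set written with NumPy: random 90-degree rotations, flips and brightness/contrast jitter. It deliberately avoids hue shifts, on the assumption that discolouration is itself a wear cue the classifier must see unaltered.

```python
import numpy as np


def augment(image: np.ndarray, rng: np.random.Generator) -> np.ndarray:
    """Return a randomly augmented copy of an H x W x 3 float image with values in [0, 1].

    Illustrative choices only. Note that a 90-degree rotation swaps H and W for
    non-square images.
    """
    out = np.rot90(image, k=int(rng.integers(4)), axes=(0, 1))  # bit orientation varies
    if rng.random() < 0.5:
        out = out[:, ::-1]  # horizontal flip
    if rng.random() < 0.5:
        out = out[::-1, :]  # vertical flip
    # Brightness/contrast jitter applied equally to all channels, so hue is preserved.
    contrast = rng.uniform(0.8, 1.2)
    brightness = rng.uniform(-0.1, 0.1)
    out = (out - 0.5) * contrast + 0.5 + brightness
    return np.clip(out, 0.0, 1.0)
```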

The system is in operation in a machine shop at an aerospace manufacturing facility.


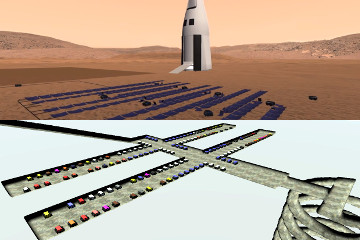

Interactive simulation for mining robot swarm

Description: The purpose of this project was to assess in detail the performance of a swarm of 200+ mining and support robots. The swarm would start in containers, then deploy solar panels, build a surface base, excavate tunnels, process raw materials and finally wind up the operation. The complexity of the project was similar to that of a simple strategy video game.

Technology: The simulation is physics-based, built in the Unity3D game engine with a set of plugins for dynamic volumetric terrain (so robots can excavate freely). Each robot's individual AI manages energy, navigation, task queues, etc. The swarm AI manages task allocation, robot coordination, excavation orders and similar.
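The swarm AI's allocation logic is not detailed in the source. As an illustration only, here is a greedy allocator sketch with hypothetical `Robot`, `Task` and `allocate` names: tasks are taken in priority order and each goes to the nearest idle robot whose remaining energy covers the travel plus the task's own cost. It is written in Python for consistency with the other examples; Unity3D scripting itself uses C#.

```python
import math
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


@dataclass
class Robot:
    name: str
    pos: Tuple[float, float]
    energy: float        # remaining energy, arbitrary units
    busy: bool = False


@dataclass
class Task:
    name: str
    pos: Tuple[float, float]
    priority: int        # higher = more urgent
    energy_cost: float   # estimated energy the task itself consumes


def allocate(robots: List[Robot], tasks: List[Task],
             energy_per_metre: float = 1.0) -> Dict[str, str]:
    """Greedy allocation: highest-priority task first, to the nearest idle robot
    that has enough energy to travel there and complete it. Marks chosen robots busy."""
    assignment = {}
    for task in sorted(tasks, key=lambda t: t.priority, reverse=True):
        best: Optional[Robot] = None
        best_dist = math.inf
        for robot in robots:
            if robot.busy:
                continue
            dist = math.dist(robot.pos, task.pos)
            needed = dist * energy_per_metre + task.energy_cost
            if robot.energy >= needed and dist < best_dist:
                best, best_dist = robot, dist
        if best is not None:
            best.busy = True
            assignment[task.name] = best.name
    return assignment
```

Greedy assignment is cheap enough to rerun frequently for 200+ robots but is not optimal; an assignment solver such as the Hungarian algorithm trades speed for better global allocations.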

At any point, the user can override the high-level strategy or take full control of an individual robot.

Drone Projects


Drone for spraying coatings on building walls (Aspira Aerial Applications)

I led the engineering effort to design the software and hardware and to build and test this wall-spraying drone. The drone is equipped with an advanced control system that allows it to operate in very close proximity to the building while still giving the pilot the ability to fly with confidence and precision. To the best of my knowledge, at the time of writing (early 2019), this is the only system of its kind in existence.
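The close-proximity control system is not described in the source. To illustrate the general idea of holding a fixed standoff distance from a wall, the sketch below is a textbook PID controller with output clamping and simple anti-windup, assuming a hypothetical distance sensor and a velocity-command interface. Gains would have to be tuned for a specific airframe.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PID:
    """PID loop holding a standoff distance from a wall (hypothetical setup).

    The output is a velocity command away from the wall, in m/s.
    """
    kp: float
    ki: float
    kd: float
    out_limit: float                   # maximum |velocity| command, m/s
    integral: float = 0.0
    prev_error: Optional[float] = None

    def update(self, setpoint: float, measured: float, dt: float) -> float:
        error = setpoint - measured    # positive when closer to the wall than desired
        self.integral += error * dt
        # No derivative on the first call, to avoid a spike from an unknown previous error.
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / dt
        self.prev_error = error
        out = self.kp * error + self.ki * self.integral + self.kd * derivative
        if abs(out) > self.out_limit:
            # Simple anti-windup: do not accumulate the integral while saturated.
            self.integral -= error * dt
            out = max(-self.out_limit, min(self.out_limit, out))
        return out
```

Clamping the command bounds how fast the drone can approach the wall, and the anti-windup step keeps the integral term from building up while the command is saturated, which would otherwise cause overshoot towards the wall.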

This project had a substantial research component: a literature review did not turn up feasible answers, so the work required systematic experimentation and deep understanding. In the end we solved all the engineering problems, and did so in a way that is practical, cost-effective and easy to use.

The customer holds a CAA exemption and is in the process of commercialising the operation.


Industrial Drone for 3D laser scanning

I was the software and hardware lead on this project. The drone's purpose is to make highly detailed 3D scans of industrial sites. Depending on operating conditions, the drone achieved 5-10 cm precision on the raw point cloud, which was better than the industry standard at the time (2018).

The drone is equipped with RTK GPS for positioning, a high-end IMU and a lidar. An onboard computer is used for data recording. Custom software for 3D point cloud synthesis was written from scratch.
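The point cloud synthesis software is not described in detail. A central step in lidar mapping of this kind is georeferencing: transforming each lidar return from the sensor frame into a world frame using the position from the RTK GPS and the orientation from the IMU. The sketch below shows that transform for a single pose, assuming Z-Y-X (yaw, pitch, roll) Euler angles and hypothetical boresight (`r_lidar_to_body`) and lever-arm calibration inputs; a real pipeline would interpolate the pose at each point's timestamp.

```python
import numpy as np


def rotation_from_euler(roll: float, pitch: float, yaw: float) -> np.ndarray:
    """Body-to-world rotation matrix for Z-Y-X (yaw, pitch, roll) Euler angles in radians."""
    cr, sr = np.cos(roll), np.sin(roll)
    cp, sp = np.cos(pitch), np.sin(pitch)
    cy, sy = np.cos(yaw), np.sin(yaw)
    rz = np.array([[cy, -sy, 0.0], [sy, cy, 0.0], [0.0, 0.0, 1.0]])
    ry = np.array([[cp, 0.0, sp], [0.0, 1.0, 0.0], [-sp, 0.0, cp]])
    rx = np.array([[1.0, 0.0, 0.0], [0.0, cr, -sr], [0.0, sr, cr]])
    return rz @ ry @ rx


def georeference(points_lidar, position, roll, pitch, yaw,
                 r_lidar_to_body=None, lever_arm=None) -> np.ndarray:
    """Map an N x 3 array of lidar points into the world frame:

    world = position + R_body_to_world @ (R_lidar_to_body @ p + lever_arm)
    """
    pts = np.asarray(points_lidar, dtype=float)
    r_lb = np.eye(3) if r_lidar_to_body is None else np.asarray(r_lidar_to_body, dtype=float)
    arm = np.zeros(3) if lever_arm is None else np.asarray(lever_arm, dtype=float)
    r_bw = rotation_from_euler(roll, pitch, yaw)
    body = pts @ r_lb.T + arm            # lidar frame -> body frame (row vectors)
    return body @ r_bw.T + np.asarray(position, dtype=float)  # body frame -> world frame
```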

The drone was deployed to scan the jetty structure at the Hinkley Point C nuclear power station building site, which was the largest construction site in the UK at the time.


Swarming Drone

• Led the effort to design and build the hardware base for a swarming drone for forestry search and rescue missions.

• Integrated a dual flight control system based on the Robot Operating System (ROS).

• Includes RTK GPS positioning.

4G Sentry Drone

• Designed and built a prototype drone for remote location monitoring. The drone operated via a 4G network (no pilot on site).

• Integrated a proof-of-concept collision avoidance system and demonstrated the feasibility of fully autonomous operation.

Autonomous Underwater Vehicle

• Led the team of engineers that delivered multiple successful customer-facing trials.

• Responsible for integrating multiple software and hardware systems on an experimental autonomous deep-water vehicle.