Intro to Neural Networks

Simple implementations of basic neural networks in both Keras and PyTorch

NumPy Implementations

Quick implementation of basic neural network building blocks in pure NumPy

-

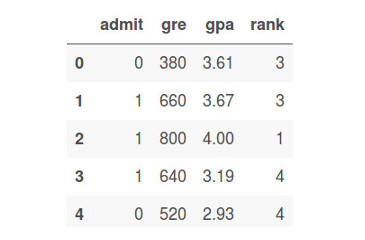

Perceptron - Classification: Single-layer perceptron used to solve a binary classification task. Trained on a college admission dataset.

Code: NumPy
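As a rough illustration of what a single-layer perceptron for binary classification looks like in NumPy, here is a minimal sketch. It assumes a sigmoid output unit trained with gradient descent on cross-entropy loss, which may differ from the linked notebook. The function names and the toy AND-style data are hypothetical stand-ins for the admission features.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def train_perceptron(X, y, lr=0.5, epochs=2000, seed=0):
    """Fit weights w and bias b of a single sigmoid unit by gradient descent."""
    rng = np.random.default_rng(seed)
    w = rng.normal(scale=0.1, size=X.shape[1])
    b = 0.0
    for _ in range(epochs):
        p = sigmoid(X @ w + b)          # predicted probability of class 1
        grad = p - y                    # d(cross-entropy)/d(pre-activation)
        w -= lr * X.T @ grad / len(y)   # average gradient over the batch
        b -= lr * grad.mean()
    return w, b

def predict(X, w, b):
    """Threshold the predicted probability at 0.5."""
    return (sigmoid(X @ w + b) >= 0.5).astype(int)

# Toy, linearly separable data (hypothetical, not the admission dataset).
X = np.array([[-1.0, -1.0], [-1.0, 1.0], [1.0, -1.0], [1.0, 1.0]])
y = np.array([0, 0, 0, 1])
w, b = train_perceptron(X, y)
predictions = predict(X, w, b)
```

A single unit like this can only learn linearly separable decision boundaries, which is why the later entries move to multi-layer networks.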

-

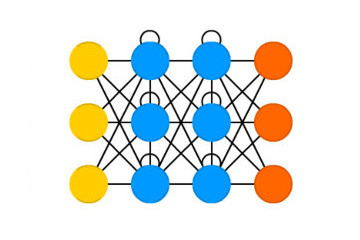

Feedforward - Regression: Feedforward multi-layer perceptron used to solve a regression task. Trained on a bike-rental dataset.

Code: NumPy

-

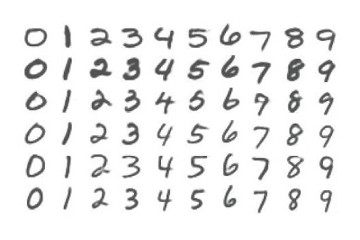

Feedforward - Classification: Feedforward multi-layer perceptron used to solve an image classification task. Trained on the Fashion-MNIST dataset.

Code: NumPy

-

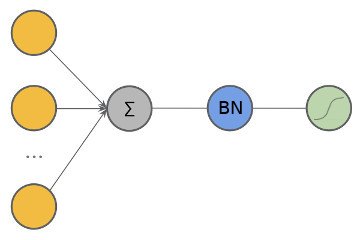

RNN - Counter: Recurrent neural network used as a counter. This RNN has a many-to-one arrangement. The dataset is synthetic. Includes both unfolded and recurrent implementations.

Code not tidied. Code: NumPy
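To make the many-to-one idea concrete, the sketch below assumes the counter's task is to count the ones in a binary sequence and uses a linear recurrent state with two scalar weights. Both the task and the names `w_x` and `w_rec` are assumptions for illustration, not taken from the notebook. The first function loops over time steps; the second writes the same computation out as an explicit sum over time steps.

```python
import numpy as np

def rnn_count_recurrent(X, w_x, w_rec):
    """Many-to-one linear RNN. X has shape (batch, time); returns the final state."""
    state = np.zeros(X.shape[0])
    for t in range(X.shape[1]):
        # The new state mixes the previous state with the current input.
        state = state * w_rec + X[:, t] * w_x
    return state

def rnn_count_unfolded(x, w_x, w_rec):
    """Same computation for one sequence, expanded over all time steps."""
    T = len(x)
    return sum(w_x * x[t] * w_rec ** (T - 1 - t) for t in range(T))

X = np.array([[0.0, 1.0, 1.0, 0.0, 1.0],
              [1.0, 1.0, 1.0, 1.0, 0.0]])

# With both weights equal to 1 the final state is the number of ones.
counts = rnn_count_recurrent(X, w_x=1.0, w_rec=1.0)
first_count = rnn_count_unfolded(X[0], w_x=1.0, w_rec=1.0)
```

In a trained version the two weights would start at random values and be learned by backpropagation through time; here they are set by hand to show the target solution.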

-

RNN - Text Generation: Character-level recurrent neural network used to generate novel text. This RNN has a many-to-many arrangement. The dataset is composed of 300 dinosaur names.

Code not tidied. Code: NumPy

Computer Vision

Vision Basics

-

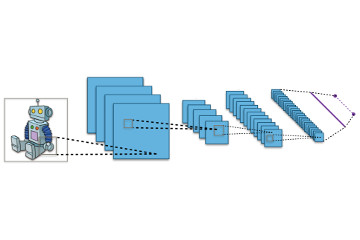

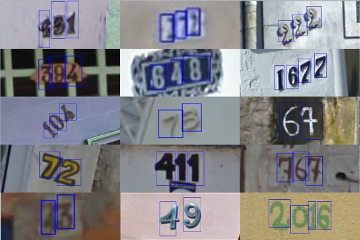

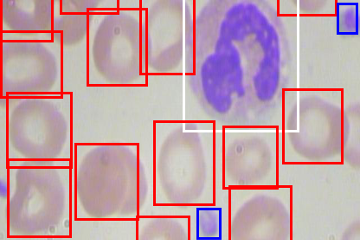

Convolutional Network: Small convolutional neural network used for image classification on the CIFAR-10 dataset. Images are 32x32 RGB pixels.

Code: Keras

-

Data Augmentation: Small convnet with data augmentation to reduce overfitting on the CIFAR-10 dataset.

Code: Keras
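The linked notebook uses Keras, but the idea behind augmentation can be sketched in plain NumPy. The example below assumes two common transforms for CIFAR-style images, a random horizontal flip and a random crop from a reflect-padded image. The choice of transforms, the `augment` function, and the `pad` parameter are illustrative assumptions, not the notebook's configuration.

```python
import numpy as np

def augment(image, rng, pad=4):
    """Randomly flip and shift one (H, W, C) image, keeping its shape."""
    h, w, _ = image.shape
    if rng.random() < 0.5:
        image = image[:, ::-1, :]  # horizontal flip
    # Pad the borders, then crop a window of the original size at a random offset.
    padded = np.pad(image, ((pad, pad), (pad, pad), (0, 0)), mode="reflect")
    top = rng.integers(0, 2 * pad + 1)
    left = rng.integers(0, 2 * pad + 1)
    return padded[top:top + h, left:left + w, :]

rng = np.random.default_rng(0)
image = rng.random((32, 32, 3))  # stand-in for one CIFAR-10 image
augmented = augment(image, rng)
```

Because each epoch sees a slightly different version of every image, the network has a harder time memorizing the training set.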

-

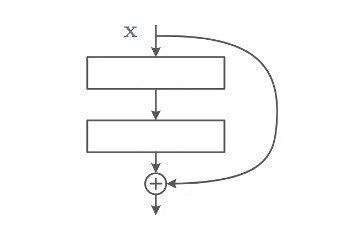

ResNet from Scratch: ResNet-50 implemented from scratch using the Keras functional API, then trained from scratch on the Oxford VGG Flowers 17 dataset.

Code: Keras

-

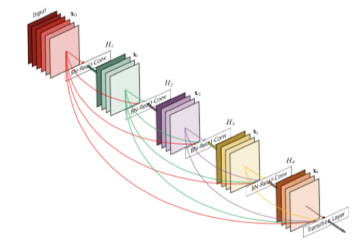

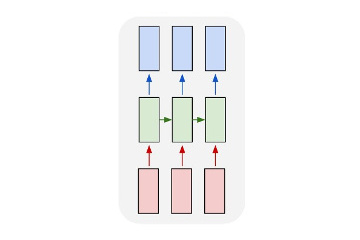

Bottleneck Features: Pre-trained DenseNet-201 is further trained on the Oxford VGG Flowers 102 dataset. Bottleneck features are extracted and only the classifier is trained.

Code: PyTorch

Mini Projects

Natural Language Processing

NLP Basics

-

Time Series Prediction: Vanilla RNN trained to perform time series prediction on a sine wave.

Code: PyTorch
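One common way to frame this task, sketched here in NumPy rather than PyTorch, is to slice the sine wave into fixed-length input windows and use the next value as the target. The window length, the sampling grid, and the `make_windows` helper are assumptions for illustration and may not match the notebook.

```python
import numpy as np

def make_windows(series, seq_len):
    """Return inputs of shape (n, seq_len) and the value following each window."""
    n = len(series) - seq_len
    X = np.stack([series[i:i + seq_len] for i in range(n)])
    y = series[seq_len:]
    return X, y

t = np.linspace(0, 8 * np.pi, 200)
series = np.sin(t)
X, y = make_windows(series, seq_len=20)
```

Each row of `X` would then be fed to the RNN one step at a time, with the matching entry of `y` as the regression target.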

-

Text Generation: Character-level LSTM trained on Shakespeare plays to generate new text.

Code: PyTorch

-

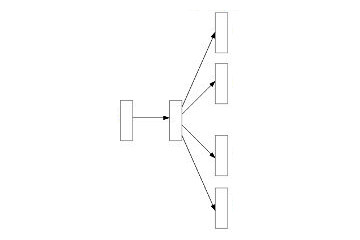

Word2Vec Skipgram: Example of how to train an embedding layer using Word2Vec Skipgram. Trained on the popular wiki8 dataset.

Code: PyTorch
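Skip-gram trains embeddings by predicting the words around each center word. The sketch below shows only the pair-generation step in plain Python, assuming a fixed symmetric window. The `skipgram_pairs` helper and the example sentence are hypothetical, and the notebook may sample window sizes or subsample frequent words differently.

```python
def skipgram_pairs(tokens, window=2):
    """Return (center, context) pairs for every token and its neighbours."""
    pairs = []
    for i, center in enumerate(tokens):
        lo = max(0, i - window)
        hi = min(len(tokens), i + window + 1)
        for j in range(lo, hi):
            if j != i:  # a word is not its own context
                pairs.append((center, tokens[j]))
    return pairs

tokens = "the quick brown fox".split()
pairs = skipgram_pairs(tokens, window=1)
```

Each pair becomes one training example: the center word's embedding is used to score the context word against the rest of the vocabulary.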

Mini Projects

-

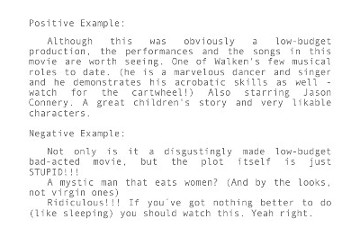

Sentiment Analysis: Word-level LSTM trained to predict whether a movie review is positive or negative. Uses the IMDB Movie Reviews dataset.

Code: PyTorch

-

Language Translation: Two models are trained to perform English-to-French translation.

• Many-to-Many (m2m)

• Sequence-to-Sequence (s2s)

Code: Keras (m2m), Keras (s2s)

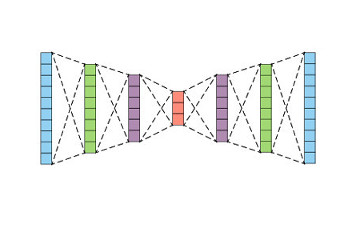

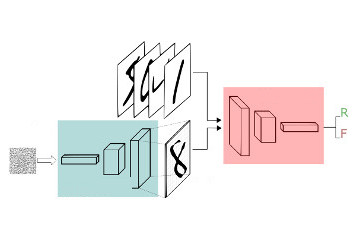

Generative Adversarial Networks

Implementations of simple GAN architectures